Optimizing the parallelization

To find the optimal parallelization setup of a VASP calculation, it is necessary to run tests for each system, algorithm and computer architecture. Below, we offer general advice on how to optimize the parallelization.

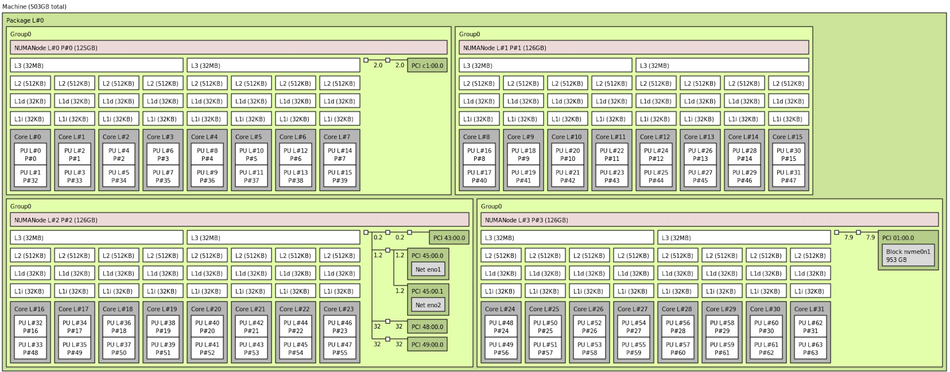

Running VASP efficiently in parallel depends strongly on the hardware architecture, the network topology used for MPI communication, and the compiler and library toolchain. Hence, a good first step is to get familiar with the computer system you are working on, i.e., consult your HPC center's documentation on submitting jobs. Keywords to look out for include: submitting jobs, distribution and binding, MPI rank and thread binding, rank/thread affinity, and hybrid MPI and OpenMP. HPC facilities usually put great effort into tuning their systems in very specific ways, and following their recommendations is necessary to get the most out of the compute resources.

Understanding the hardware

Optimizing the parallelization

For repetitive tasks, a few iterations estimate the performance of the full calculation very well. For example, run only a few electronic or ionic self-consistency steps (without reaching full convergence). Compare the time various parallelization setups need to perform these few iterations.
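The timing comparison described above can be sketched in Python. This is a minimal illustration, not a VASP tool: the function names `mean_loop_time` and `rank_setups` are hypothetical, and the sketch assumes that each electronic step is reported in the OUTCAR file on a line of the form `LOOP:  cpu time <t>: real time <t>`.

```python
import re

# Assumed OUTCAR timing line: "LOOP:  cpu time   12.34: real time   12.56"
# Lines starting with "LOOP+:" (ionic steps) are deliberately not matched.
LOOP_RE = re.compile(r"^\s*LOOP:\s+cpu time\s*([\d.]+):\s*real time\s*([\d.]+)")


def mean_loop_time(outcar_text):
    """Return the average real time per electronic step in OUTCAR text."""
    times = []
    for line in outcar_text.splitlines():
        match = LOOP_RE.match(line)
        if match:
            times.append(float(match.group(2)))
    if not times:
        raise ValueError("no LOOP timing lines found")
    return sum(times) / len(times)


def rank_setups(outcars):
    """Sort setups from fastest to slowest.

    `outcars` maps a user-chosen label (e.g. "KPAR=2") to the OUTCAR text
    of the corresponding short test run.
    """
    return sorted((mean_loop_time(text), label) for label, text in outcars.items())
```

For example, `rank_setups({"KPAR=1": open("run1/OUTCAR").read(), "KPAR=2": open("run2/OUTCAR").read()})` would list the two test runs by their mean time per step; the directory names here are placeholders.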

Try to get as close as possible to the actual system. Specifically, use the same or a very similar physical system (atoms, cell size, cutoff, ...); run on the target computational hardware (CPUs, interconnect, number of nodes, ...). If too many parameters are different, the parallel configuration may not be transferable to the production calculation.

In our experience, VASP yields the best performance by combining multiple parallelization options because the parallel efficiency of each level drops near its limit. By default, VASP distributes the number of bands (NBANDS) over the available MPI ranks. But it is often beneficial to add parallelization of the FFTs (NCORE), parallelization over k points (KPAR), and parallelization over separate calculations (IMAGES). Additionally, there are some parallelization options for specific algorithms in VASP, e.g., NOMEGAPAR for parallelization over imaginary frequency points in [math]\displaystyle{ GW }[/math] and RPA calculations. In summary, VASP parallelizes with

- [math]\displaystyle{ \text{total ranks} = \text{ranks parallelizing bands} \times \text{NCORE} \times \text{KPAR} \times \text{IMAGES} \times \text{other algorithm-dependent tags}. }[/math]
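The product rule above can be checked before submitting a job. The following is a minimal Python sketch, not part of VASP: the function name `band_ranks` and its arguments are hypothetical, and all algorithm-dependent tags are folded into a single `other` factor.

```python
def band_ranks(total_ranks, ncore=1, kpar=1, images=1, other=1):
    """Return the number of ranks left for band parallelization.

    Rearranges: total ranks = band ranks * NCORE * KPAR * IMAGES * other.
    Raises ValueError if the factors do not divide total_ranks evenly.
    """
    divisor = ncore * kpar * images * other
    if divisor <= 0 or total_ranks % divisor != 0:
        raise ValueError(
            f"{total_ranks} ranks cannot be split evenly by "
            f"NCORE*KPAR*IMAGES*other = {divisor}"
        )
    return total_ranks // divisor


# Hypothetical example: 128 ranks with NCORE=4 and KPAR=4
print(band_ranks(128, ncore=4, kpar=4))  # 8
```

A setup for which this check fails does not satisfy the product rule and is best excluded from the list of candidates.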

To optimize the parallelization, follow this recipe:

- Create a list of the relevant parallelization INCAR tags for the specific calculation. Read the documentation for each of the relevant tags to understand the limits and reasonable choices.

- For any calculation involving electronic minimization, it can be useful to first run VASP with ALGO=None set in the INCAR file. With ALGO=None the computational setup for the electronic minimization is done without actually performing the minimization. For instance, the FFTs are planned, and the irreducible k points of the first Brillouin zone are constructed. Therefore, some parameters, e.g., the default number of Kohn-Sham orbitals (NBANDS) and the total number of plane waves, are written to the OUTCAR file while using barely any computational time.

- Combine the information from the documentation and the dry run into a few possible candidates for a reasonable setup. Run test calculations on a subset of the production run, e.g., by reducing the number of steps.

- Run the production calculation with the best performing setup.
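The values reported by the dry run in the recipe above can be collected with a short script. The Python sketch below is an illustration under stated assumptions: the function name `read_dry_run_parameters` is hypothetical, and the OUTCAR file is assumed to contain entries written as `NKPTS = <integer>` and `NBANDS= <integer>` (the whitespace around `=` may vary).

```python
import re


def read_dry_run_parameters(outcar_text):
    """Extract NKPTS and NBANDS from OUTCAR text.

    Assumes entries of the form 'NKPTS = <int>' and 'NBANDS= <int>';
    only the first occurrence of each name is used.
    """
    params = {}
    for name in ("NKPTS", "NBANDS"):
        match = re.search(rf"\b{name}\s*=\s*(\d+)", outcar_text)
        if match is None:
            raise ValueError(f"{name} not found in OUTCAR text")
        params[name] = int(match.group(1))
    return params


# Sample line in the assumed format
sample = (
    "   k-points           NKPTS =     10   k-points in BZ     NKDIM =     10"
    "   number of bands    NBANDS=     16\n"
)
print(read_dry_run_parameters(sample))  # {'NKPTS': 10, 'NBANDS': 16}
```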

For the common case of electronic minimization calculations, the following rules of thumb apply:

- Aim to set the number of ranks to the default value of NBANDS divided by a small integer. Note that VASP will increase NBANDS to accommodate the number of ranks.

- Choose NCORE as a factor of the cores per node to avoid communicating between nodes for the FFTs. Note that NCORE cannot be set when using OpenMP threading and/or the OpenACC GPU port.

- The k-point parallelization is efficient but requires additional memory. Given sufficient memory, increase KPAR up to the number of irreducible k points. Keep in mind that KPAR should be a divisor of the number of k points.

- For bulk systems with small unit cells (NBANDS is small, NKPTS = number of k points is large), NCORE = 1 and KPAR = NKPTS is optimal.

- For running on GPUs, you may try

  - MPI ranks = number of GPUs

  - KPAR = number of GPUs, if memory allows

  - OpenMP threading can be quite important for the parts that still run on the host, because the number of GPUs is usually rather small.

- Finally, use the IMAGES tag to split several VASP runs into separate calculations. The limit is dictated by the number of desired calculations.
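The rules of thumb for NBANDS, NCORE, and KPAR can be turned into a list of candidate setups to test. The following Python sketch is only an illustration: the function names and arguments are hypothetical, IMAGES and algorithm-specific tags are ignored, and the rounding of NBANDS up to the next multiple of the band-parallel ranks is an assumption about how VASP accommodates the number of ranks.

```python
def divisors(n):
    """Return all positive divisors of n in ascending order."""
    return [d for d in range(1, n + 1) if n % d == 0]


def candidate_setups(total_ranks, cores_per_node, nkpts, nbands_default):
    """List candidate (KPAR, NCORE) combinations following the rules of thumb.

    KPAR is restricted to divisors of the number of k points, NCORE to
    divisors of the cores per node, and both must split the ranks evenly.
    """
    candidates = []
    for kpar in divisors(nkpts):
        if total_ranks % kpar:
            continue
        ranks_per_kgroup = total_ranks // kpar
        for ncore in divisors(cores_per_node):
            if ranks_per_kgroup % ncore:
                continue
            band_ranks = ranks_per_kgroup // ncore
            # Assumption: NBANDS is rounded up to a multiple of band_ranks.
            nbands = -(-nbands_default // band_ranks) * band_ranks
            candidates.append(
                {"KPAR": kpar, "NCORE": ncore,
                 "band_ranks": band_ranks, "NBANDS": nbands}
            )
    return candidates


# Hypothetical example: 64 ranks, 32 cores per node, 4 k points, 100 bands
for setup in candidate_setups(64, 32, 4, 100):
    print(setup)
```

Candidates whose NBANDS is much larger than the default waste effort on additional bands, so sorting the list by NBANDS is one way to narrow it down before running the timing tests.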

Related tags and articles

Parallelization, NCORE, KPAR, IMAGES